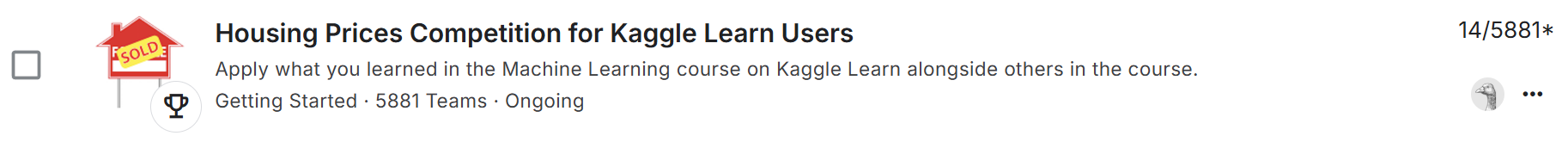

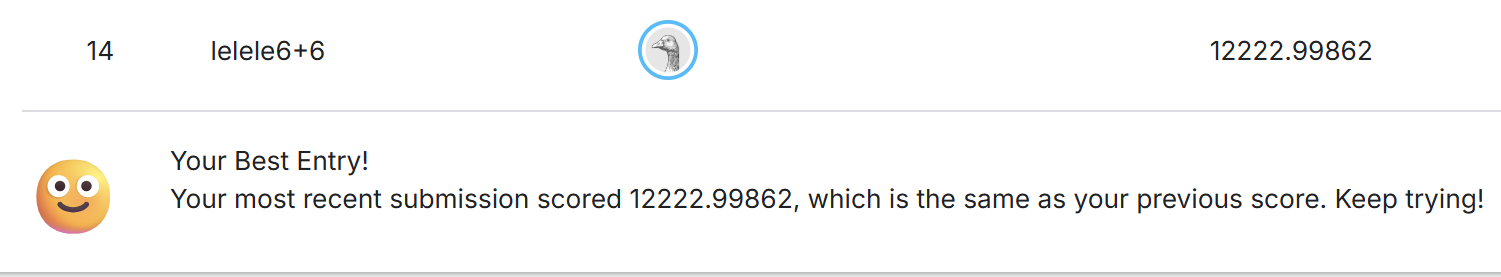

Achieving a Top 0.25% Finish in Kaggle House Price Prediction

Introduction

Predicting house prices is a classic machine learning task that rewards careful feature engineering and model design. I entered the Kaggle “House Prices: Advanced Regression Techniques” competition and placed in the top 0.25% of roughly 6,000 participants.

Project Highlights

- Extensive Feature Engineering: Created 50+ new features, including polynomial features and interaction terms, to capture non-linear effects and interactions between variables that a purely linear model would miss.

- Model Development: Implemented and fine-tuned Linear Regression, Random Forest, XGBoost, and Neural Networks.

- Stacked Ensemble: Combined the best-performing individual models through a meta-model trained on their predictions, to minimize prediction error.
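The write-up does not include code, so the following is only a minimal sketch of how polynomial and interaction features, several base regressors, and a stacked ensemble can fit together in scikit-learn. Everything in it is an assumption for illustration rather than the pipeline used in the competition: the synthetic data, the hyperparameters, Ridge as both the linear model and the meta-model, `GradientBoostingRegressor` as a stand-in for XGBoost (so only scikit-learn is needed), and the helper names `poly_ridge` and `log_rmse`. The neural network is left out for brevity. Scoring uses RMSE on the log of the price, which is how this competition evaluates submissions.

```python
import numpy as np
from sklearn.ensemble import (
    GradientBoostingRegressor,
    RandomForestRegressor,
    StackingRegressor,
)
from sklearn.linear_model import Ridge, RidgeCV
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Hypothetical synthetic data standing in for the Kaggle training set:
# 500 "houses", 5 numeric features, and a log-price containing a squared
# term and an interaction term (not the real competition data).
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(500, 5))
log_price = (
    11.5 + X[:, 0] + 0.5 * X[:, 1] ** 2 + 0.8 * X[:, 2] * X[:, 3]
    + rng.normal(0.0, 0.05, size=500)
)
price = np.exp(log_price)

# Base model 1: scale, expand to all degree-2 terms (squares and pairwise
# interactions), then fit a regularized linear regression.
poly_ridge = make_pipeline(
    StandardScaler(),
    PolynomialFeatures(degree=2, include_bias=False),
    Ridge(alpha=1.0),
)
# Base models 2 and 3: tree ensembles (gradient boosting stands in for XGBoost).
forest = RandomForestRegressor(n_estimators=100, random_state=0)
gbm = GradientBoostingRegressor(random_state=0)

# Stacking: 5-fold out-of-fold predictions from each base model become the
# inputs of a ridge meta-model that learns how to weight them.
stack = StackingRegressor(
    estimators=[("poly_ridge", poly_ridge), ("forest", forest), ("gbm", gbm)],
    final_estimator=RidgeCV(),
    cv=5,
)


def log_rmse(model, X, price):
    """5-fold cross-validated RMSE between predicted and actual log(price)."""
    scores = cross_val_score(
        model, X, np.log(price), cv=5, scoring="neg_root_mean_squared_error"
    )
    return -scores.mean()


results = {
    name: log_rmse(model, X, price)
    for name, model in [
        ("poly_ridge", poly_ridge),
        ("forest", forest),
        ("gbm", gbm),
        ("stack", stack),
    ]
}
for name, rmse in results.items():
    print(f"{name:>10}: log-RMSE = {rmse:.4f}")
```

Because the meta-model sees only out-of-fold predictions, it learns how far to trust each base model without being fooled by predictions those models made on their own training rows. On real data, comparing the stack's log-RMSE with each base model's score is a quick check that the ensemble is actually helping.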

Impact

This project demonstrates my ability to design end-to-end ML pipelines, from feature engineering to ensemble modeling, and to achieve competitive results in a large-scale machine learning competition.